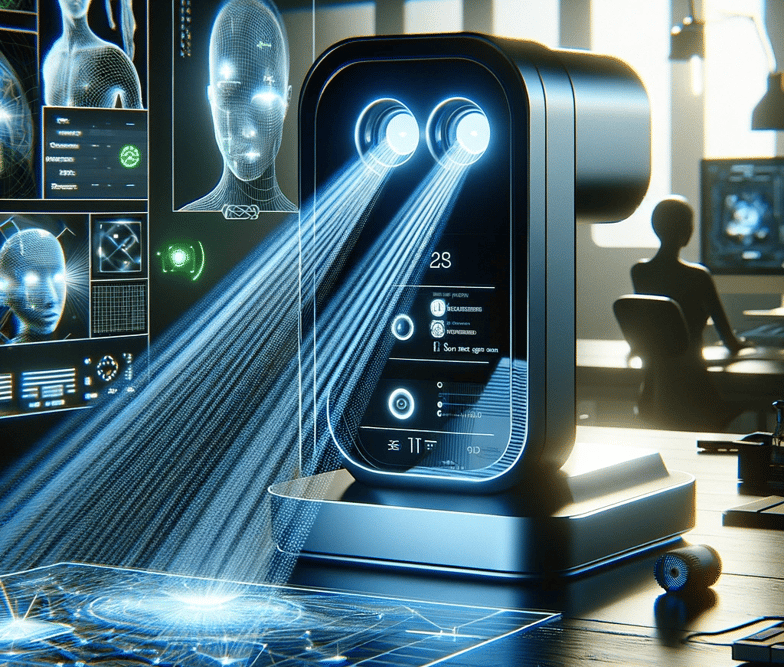

How an LLM reads an image

ChatGPT, Bard and other LLM-based chatbots let you upload an image and read data from it. How does that actually work? Let's decode it in this post.

Kedar Jois

12/6/2023 | 1 min read

/Decoding Image to Text Processing

Chat interfaces built on Large Language Models (LLMs) let us ask questions and pose problems not only through text prompts but also through images and videos.

This idea fascinated me and pushed me to dig into its root: how does it actually convert an image into text?

/Tesseract

As cool as the name sounds, Tesseract is an open-source Optical Character Recognition (OCR) engine, originally developed at HP and later developed and sponsored by Google.

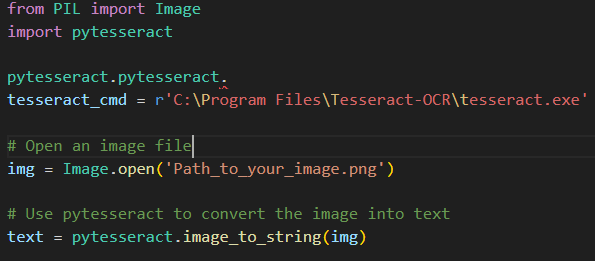

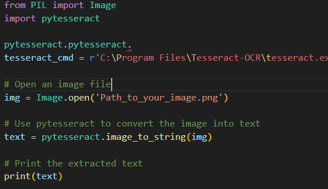
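Tesseract itself is a standalone command-line program, and Python wrappers ultimately call it. A minimal sketch of invoking it directly, assuming the `tesseract` executable is installed and on PATH; `build_tesseract_cmd` and `ocr_with_cli` are hypothetical helper names used only for illustration:

```python
import shutil
import subprocess


def build_tesseract_cmd(image_path: str, lang: str = "eng") -> list[str]:
    """Build the argument list for the Tesseract CLI.

    Passing "stdout" as the output base makes tesseract print the
    recognized text instead of writing it to a .txt file.
    """
    return ["tesseract", image_path, "stdout", "-l", lang]


def ocr_with_cli(image_path: str, lang: str = "eng") -> str:
    """Run the tesseract executable on an image and return its text output."""
    if shutil.which("tesseract") is None:
        raise FileNotFoundError("tesseract executable not found on PATH")
    result = subprocess.run(
        build_tesseract_cmd(image_path, lang),
        capture_output=True,
        text=True,
        check=True,  # raise CalledProcessError if tesseract exits with an error
    )
    return result.stdout
```

This is roughly the work a wrapper library automates for you: write the image somewhere Tesseract can read it, run the program, and collect its output.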

All you need on the Python side is Pillow, a fork of PIL (Python Imaging Library), and pytesseract, a Python wrapper around Tesseract, to extract text from an image.
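A minimal sketch of how the two libraries fit together, assuming Pillow, pytesseract and the Tesseract engine are all installed; `extract_text` is a hypothetical helper name, while `Image.open` and `pytesseract.image_to_string` are the libraries' own functions:

```python
def extract_text(image_path: str, lang: str = "eng") -> str:
    """Open an image with Pillow and return the text Tesseract finds in it."""
    # Imported inside the function so this file can be loaded even before the
    # OCR dependencies are installed. Note that Pillow is imported as "PIL".
    from PIL import Image
    import pytesseract

    with Image.open(image_path) as img:
        # pytesseract hands the image to the tesseract executable and
        # returns the recognized text as a single string.
        return pytesseract.image_to_string(img, lang=lang)
```

Usage would look like `print(extract_text("receipt.png"))`, where `receipt.png` stands in for any image file of your own.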

Start off by installing Pillow and pytesseract with pip: `pip install Pillow pytesseract`
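One common stumbling block is that the package installed as "Pillow" is imported as "PIL". A small stdlib-only check, assuming you installed both Pillow and pytesseract with pip; `REQUIRED_MODULES` and `missing_packages` are hypothetical names for illustration:

```python
import importlib.util

# pip package name -> name used in `import` statements.
# Pillow is installed as "Pillow" but imported as "PIL".
REQUIRED_MODULES = {"Pillow": "PIL", "pytesseract": "pytesseract"}


def missing_packages(required=None):
    """Return the pip names of required packages that cannot be imported."""
    required = REQUIRED_MODULES if required is None else required
    return [
        pip_name
        for pip_name, module_name in required.items()
        # find_spec returns None when a top-level module is not installed.
        if importlib.util.find_spec(module_name) is None
    ]
```

An empty list from `missing_packages()` means both imports should work.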

Install the Tesseract engine itself from GitHub: tesseract-ocr/tesseract (Tesseract Open Source OCR Engine, main repository). pytesseract only calls this program, so it has to be installed separately.

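Add the Tesseract installation folder to your system or user PATH environment variable so that pytesseract can find the tesseract executable.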
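If editing PATH is not an option, pytesseract can also be pointed at the executable directly through its `pytesseract.pytesseract.tesseract_cmd` attribute. A minimal sketch, assuming pytesseract is installed; `find_tesseract` and `configure_pytesseract` are hypothetical helpers, and any candidate path you pass (for example something like `C:\Program Files\Tesseract-OCR\tesseract.exe` on Windows) should be adjusted to wherever your installer actually put Tesseract:

```python
import os
import shutil


def find_tesseract(candidates=()):
    """Return a path to the tesseract executable, or None if it cannot be found.

    Checks PATH first, then any explicit candidate paths supplied by the caller.
    """
    on_path = shutil.which("tesseract")
    if on_path:
        return on_path
    for candidate in candidates:
        if os.path.isfile(candidate) and os.access(candidate, os.X_OK):
            return candidate
    return None


def configure_pytesseract(candidates=()):
    """Point pytesseract at the tesseract executable and return its path."""
    path = find_tesseract(candidates)
    if path is None:
        raise FileNotFoundError("Install Tesseract and add it to PATH")
    import pytesseract  # imported lazily; requires `pip install pytesseract`

    # pytesseract reads the executable location from this module attribute.
    pytesseract.pytesseract.tesseract_cmd = path
    return path
```

Checking PATH first keeps the explicit candidate list as a fallback, so the same script works on machines where the environment variable is already set.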

/Time to extract the text